Testing LLaMA (with weights and how to run it)

Recently, following the trend, I tested a few open-source ChatGPT-like large language models (LLMs).

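I mainly looked at Meta's semi-open-source LLaMA, and along the way also at RWKV, open-sourced by a Chinese developer. The main goal was to see whether they could help me write some code.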

Let's start with LLaMA. Access to the model originally required an application, but that is no longer needed. Download link:

- magnet:?xt=urn:btih:ZXXDAUWYLRUXXBHUYEMS6Q5CE5WA3LVA&dn=LLaMA

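However, few ready-made libraries support this original format, so most people use weights that have already been converted to the Hugging Face format, for example: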

- https://huggingface.co/decapoda-research/llama-13b-hf

- https://huggingface.co/decapoda-research (all the converted models)

- Or via magnet: magnet:?xt=urn:btih:dc73d45db45f540aeb6711bdc0eb3b35d939dcb4&dn=LLaMA-HFv2&tr=http%3a%2f%2fbt2.archive.org%3a6969%2fannounce&tr=http%3a%2f%2fbt1.archive.org%3a6969%2fannounce

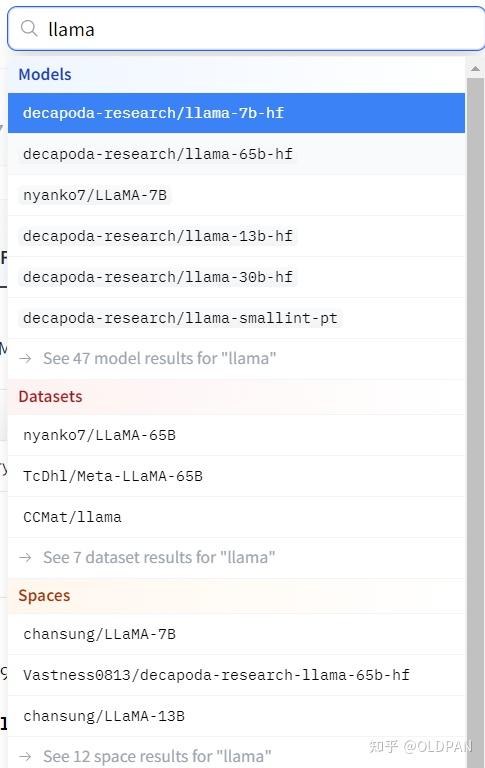

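There are many more; just search on Hugging Face.

Besides downloading directly from the sites above, you can also clone the repositories with git-lfs. The weights are large, so be patient: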

curl -s https://packagecloud.io/install/repositories/github/git-lfs/script.deb.sh | sudo bash

apt-get install git-lfs

git lfs install

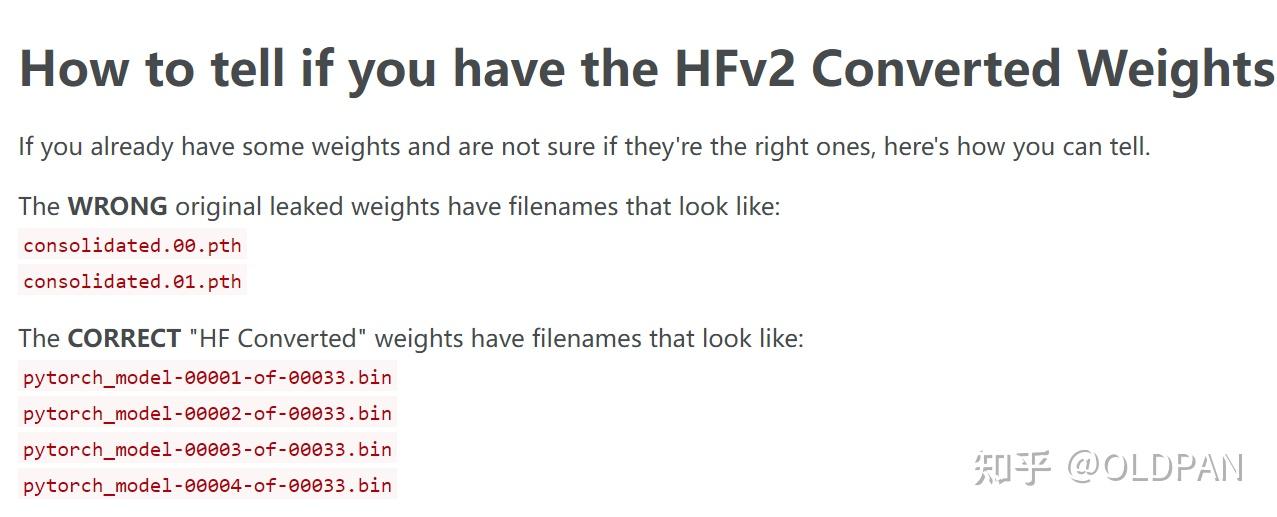

git clone https://huggingface.co/decapoda-research/llama-13b-hf要注意的的是,这个权重刚更新过一版,现在是V2,如果你发现模型跑不了,可能又更新了。可以参考这里获取最新的 https:// rentry.org/llama-tard-v 2#3-updated-hfv2-converted-llama-weights
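A clone made without `git lfs install` can also produce weights that won't load, independently of the V2 update. In that case Git LFS leaves small text "pointer" files where the real weight files should be.

Below is a minimal sketch for spotting such leftovers. It assumes the standard LFS pointer header line `version https://git-lfs.github.com/spec/v1`. The helper name `find_lfs_pointers` is hypothetical and not part of any library.

```python
from pathlib import Path

# Git LFS pointer files start with this spec line instead of real binary content.
LFS_POINTER_PREFIX = b"version https://git-lfs.github.com/spec/v1"


def find_lfs_pointers(repo_dir):
    """Return (sorted) files under repo_dir that are still LFS pointers."""
    pointers = []
    for path in Path(repo_dir).rglob("*"):
        # Skip directories and anything inside git's own .git metadata folder.
        if not path.is_file() or ".git" in path.parts:
            continue
        # Only the first few bytes are needed, so large weight files are cheap to check.
        with path.open("rb") as f:
            head = f.read(len(LFS_POINTER_PREFIX))
        if head == LFS_POINTER_PREFIX:
            pointers.append(path)
    return sorted(pointers)
```

For example, call `find_lfs_pointers("llama-13b-hf")` after the clone. If it returns anything, running `git lfs pull` inside the repository should fetch the actual files.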

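To run it, I used this repository:

This repository is similar to the Stable Diffusion tooling: it integrates many open-source NLP models and has recently added LLaMA support. For run instructions, see:

That's it for LLaMA. To try RWKV, just follow the official GitHub repo: the README spells out exactly how to run it and how to get the models. Kudos for that:

Enough talk, let's look at the results.

LLaMA

First, the smallest model, 7B.

With the default parameters, I asked it for a simple quicksort: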

Prompt: ['quick sort in python:']

LLaMA: quick sort in python:

\begin{code}

def quick_sort(array):
    if len(array) <= 1:
        return array
    pivot = array[0]
    left = quick_sort(array[1:])
    right = quick_sort(array[1:])
    left.append(pivot)
    return left + right

\end{code}

I have no idea what I'm doing wrong, but I'm getting an error:

\begin{code}

TypeError: 'int' object is not iterable

\end{code}

Comment: You have an array of ints, but you are trying to iterate over an array of strings.

Comment: Your array is of type `int`, so you can't iterate over it.

Answer: You're passing an integer to the `quick_sort` function, so it's not iterable.

To fix this, you can make `array` a list:

\begin{code}

array = [0, 1, 2, 3]

\end{code}

Then you can iterate over it:

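The code above looks plausible, but it doesn't actually work. Both recursive calls sort the same slice `array[1:]`, and nothing is ever partitioned around the pivot. As a result, elements get duplicated, and the output is neither sorted nor the same length as the input.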
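For comparison, here is a minimal hand-written quicksort that does partition around the pivot. It builds new lists rather than sorting in place, chosen for readability over speed. It reuses the name `quick_sort` only to line up with the generated code; this is my own sketch, not model output.

```python
def quick_sort(array):
    """Return a new sorted list using a simple (not in-place) quicksort."""
    if len(array) <= 1:
        return list(array)
    pivot = array[0]
    rest = array[1:]
    # Partition the remaining elements around the pivot.
    left = [x for x in rest if x < pivot]
    right = [x for x in rest if x >= pivot]
    # Sort each side recursively and place the pivot between them.
    return quick_sort(left) + [pivot] + quick_sort(right)


print(quick_sort([3, 6, 1, 8, 2, 9, 2]))  # [1, 2, 2, 3, 6, 8, 9]
```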

Next, a simple C++ task:

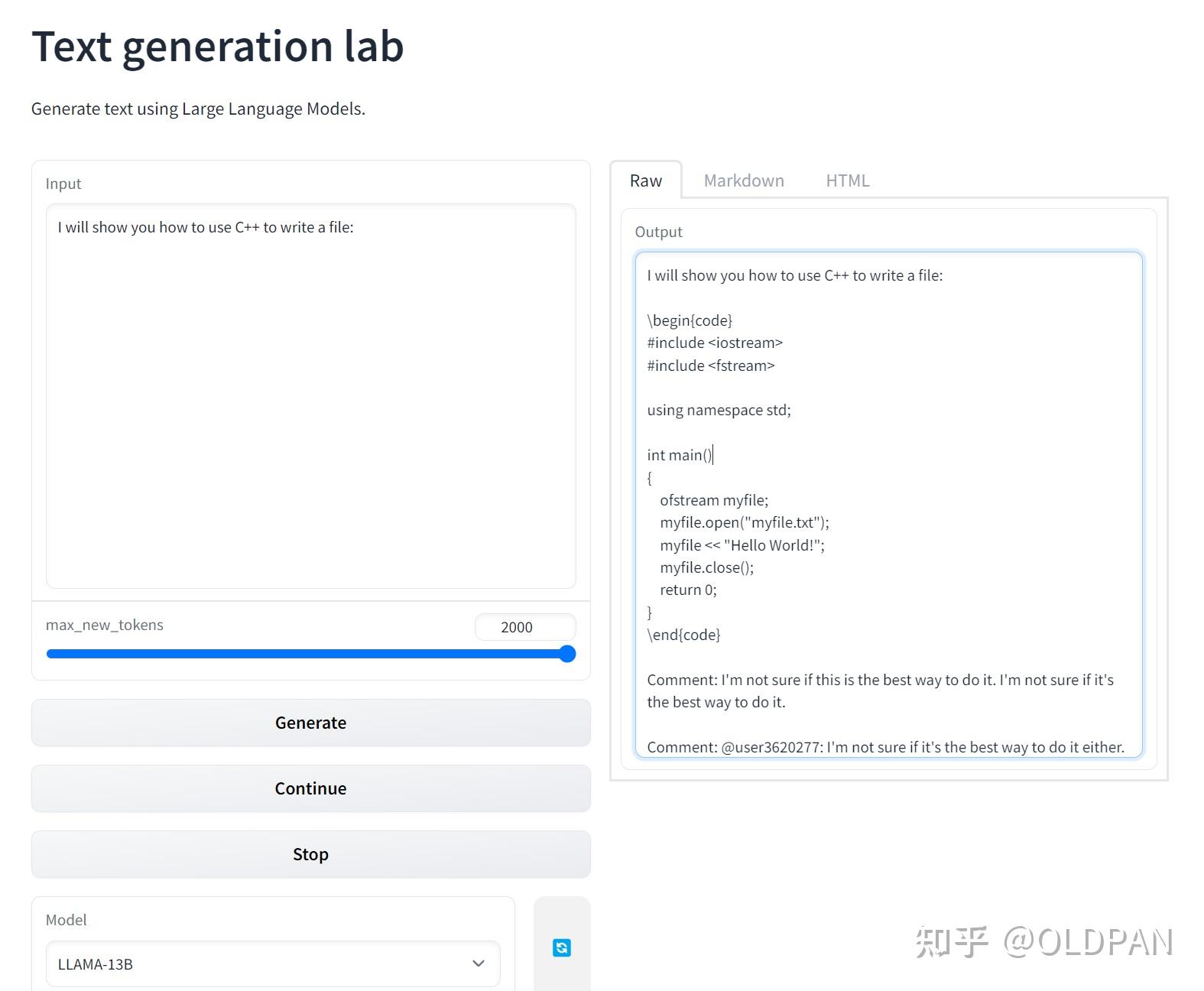

Prompt: ['I will show you how to use C++ to write a file:\n']

LLaMA: I will show you how to use C++ to write a file:

First, let's look at the C++ code:

std::ofstream outFile;

outFile.open("myfile.txt");

outFile << "Hello World!";

outFile.close();

The C++ code above opens the file "myfile.txt" and writes the string "Hello World!" to it.

You will notice that the C++ code uses a standard library function called std::ofstream. This function takes a stream and an argument which specifies the file to open.

The stream is a C++ class that represents a sequence of characters. It is the stream that is used to write to the file. The stream can be used to write, read, or copy the characters in the stream.

The argument to the std::ofstream function is the name of the file to open.

When you use the std::ofstream function, the file is opened. This is called opening the file.

When you open the file, the C++ compiler automatically creates the file for you. This is called creating the file.

When you open the file, the C++ compiler also automatically creates a file stream. This is called creating the file stream.

When you use the std::ofstream function, the file stream is also opened. This is called opening the file stream.

The std::ofstream function also returns a reference to the file stream. This is called returning the file stream.

You can then use the std::ofstream function to write to the file stream.

The std::ofstream function writes the string "Hello World!" to the file stream.

The std::ofstream function writes the string "Hello World!" to the file "myfile.txt".

The std::ofstream function writes the string "Hello World!" to the file stream. The file stream writes the string "Hello World!" to the file "myfile.txt".

The C++ code above uses the std::ofstream function to write the string "Hello World!" to the file "myfile.txt".

This is the C++ code that writes the string "Hello World!" to the file "myfile.txt":

The C++ code above opens the file "myfile.txt".

The C++ code above writes the string "Hello World!" to the file "myfile.txt".

The C++ code above closes the file.

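The C++ code above opens the file "myfile.txt".

The code itself is fine, but it can't be run as-is. Copied and pasted, it still needs adjusting, since there is no `#include <fstream>` and no `main` function. The explanation around it also degenerates into repetition, and parts of it are wrong: `std::ofstream` is a class, not a function, and the file is created when the program runs, not by the compiler. Perhaps my prompt wasn't written well.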

Next, let's look at 13B in INT8.

True. 13B MP is 2 and required 27GB VRAM. So it can run in a single A100 80GB or 40GB, but after modifying the model. In other words, the 13B model needs about 27 GB of VRAM in its default FP16, while in INT8 about 14 GB is enough.
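These figures roughly match a back-of-the-envelope estimate: FP16 stores 2 bytes per parameter and INT8 stores 1.

The sketch below assumes a nominal 13e9 parameters (the exact LLaMA-13B count differs slightly) and counts only the weights. The extra gigabyte or so in the quoted numbers is presumably runtime overhead such as activations and the CUDA context. The helper `weight_memory_gb` is hypothetical.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Memory needed just to store the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: nominal parameter count; the real LLaMA-13B count differs slightly.
NUM_PARAMS_13B = 13e9

fp16_gb = weight_memory_gb(NUM_PARAMS_13B, 2)  # FP16: 2 bytes per parameter
int8_gb = weight_memory_gb(NUM_PARAMS_13B, 1)  # INT8: 1 byte per parameter
print(f"FP16: {fp16_gb:.0f} GB, INT8: {int8_gb:.0f} GB")  # FP16: 26 GB, INT8: 13 GB
```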

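The generated code runs, which is not bad. It does take some judgment, though: the model generates a lot of code, and you have to pick out the parts that are usable.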

Prompt: I will show you how to use C++ to read a file line by line:

\begin{code}
#include <fstream>
#include <string>
#include <iostream>

int main()
{
    std::ifstream myfile("myfile.txt");
    std::string line;
    while (getline(myfile, line))
        std::cout << line << std::endl;
}
\end{code}

Comment: This doesn't work if the file is empty.

Comment: @AliNaddaf, you can use a `while (myfile.good())` loop, and check if `myfile.eof()` is true.

Answer: \begin{code}

#include <iostream>

#include <fstream>

#include <string>

using namespace std;

int main()

ifstream infile;

string line;

infile.open("myfile.txt");

if(infile.is_open())

while(!infile.eof())

getline(infile, line);

cout << line << endl;

cout << "Unable to open file." << endl;

infile.close();

return 0;

\end{code}

Answer: \begin{code}

#include <fstream>

#include <iostream>

#include <string>

int main()
{
    ifstream myfile("myfile.txt");
    string line;
    while (getline(myfile, line))
        cout << line << endl;
}

\end{code}

Comment: Welcome to Stack Overflow! Code-only answers are discouraged on Stack Overflow because they don't explain how it solves the problem. Please edit your answer to explain what this code does and how it answers the question, so that it is useful for users with similar issues. See: How do I write a good answer?

Compared with ChatGPT, there's no contest. ChatGPT adds plenty of explanation, and its code has no problems and runs as-is:

Sure, I'd be happy to help with that!

Here's some sample code in C++ to read a file line by line:

#include <iostream>
#include <fstream>
#include <string>

int main() {
    std::string line;
    std::ifstream myfile("example.txt");
    if (myfile.is_open()) {
        while (std::getline(myfile, line)) {
            std::cout << line << '\n';
        }
        myfile.close();
    } else {
        std::cout << "Unable to open file";
    }
    return 0;
}

In this code, we first declare a string variable line to store each line of the file that we read.

Then we use the std::ifstream class to open the file. We specify the name of the file we want to read ("example.txt" in this case) as an argument to the ifstream constructor.

Next, we check if the file was opened successfully using the is_open() function. If it was, we use a while loop and the std::getline() function to read each line of the file into the line variable, and then print it to the console using std::cout.

Finally, we close the file using the close() function and return 0 to indicate successful completion of the program.

Note that if the file cannot be opened for some reason, we print an error message to the console instead of trying to read from it.

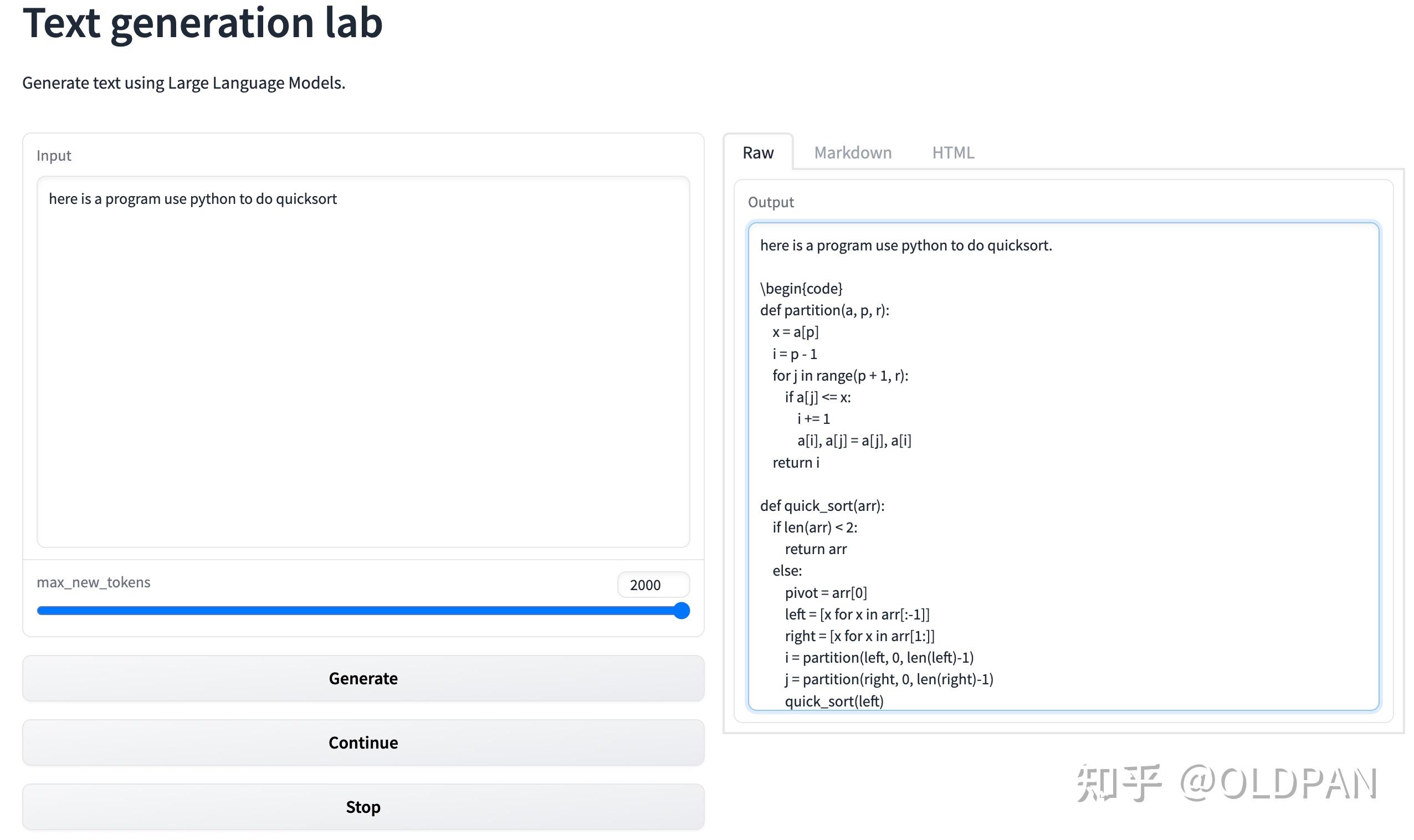

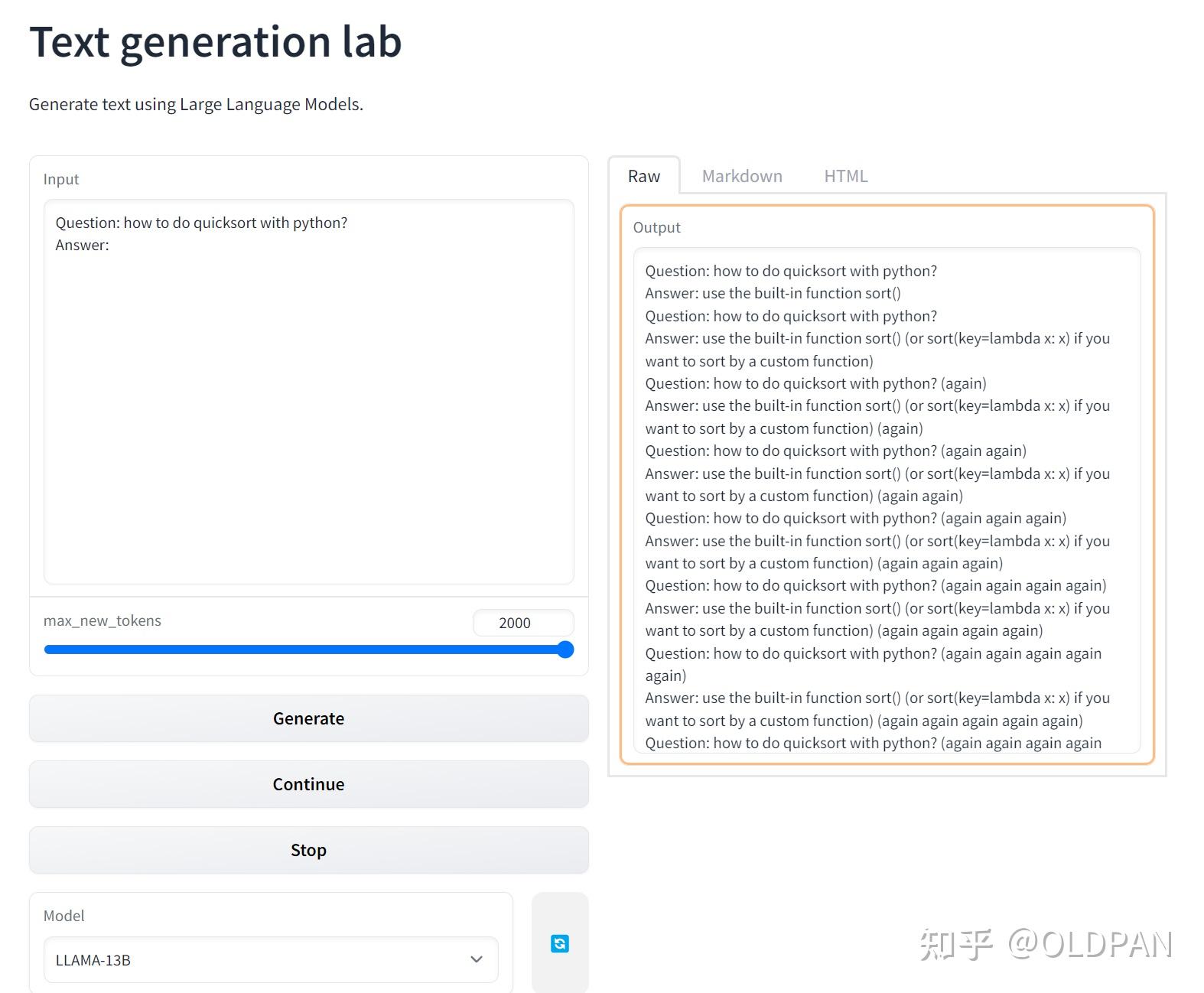

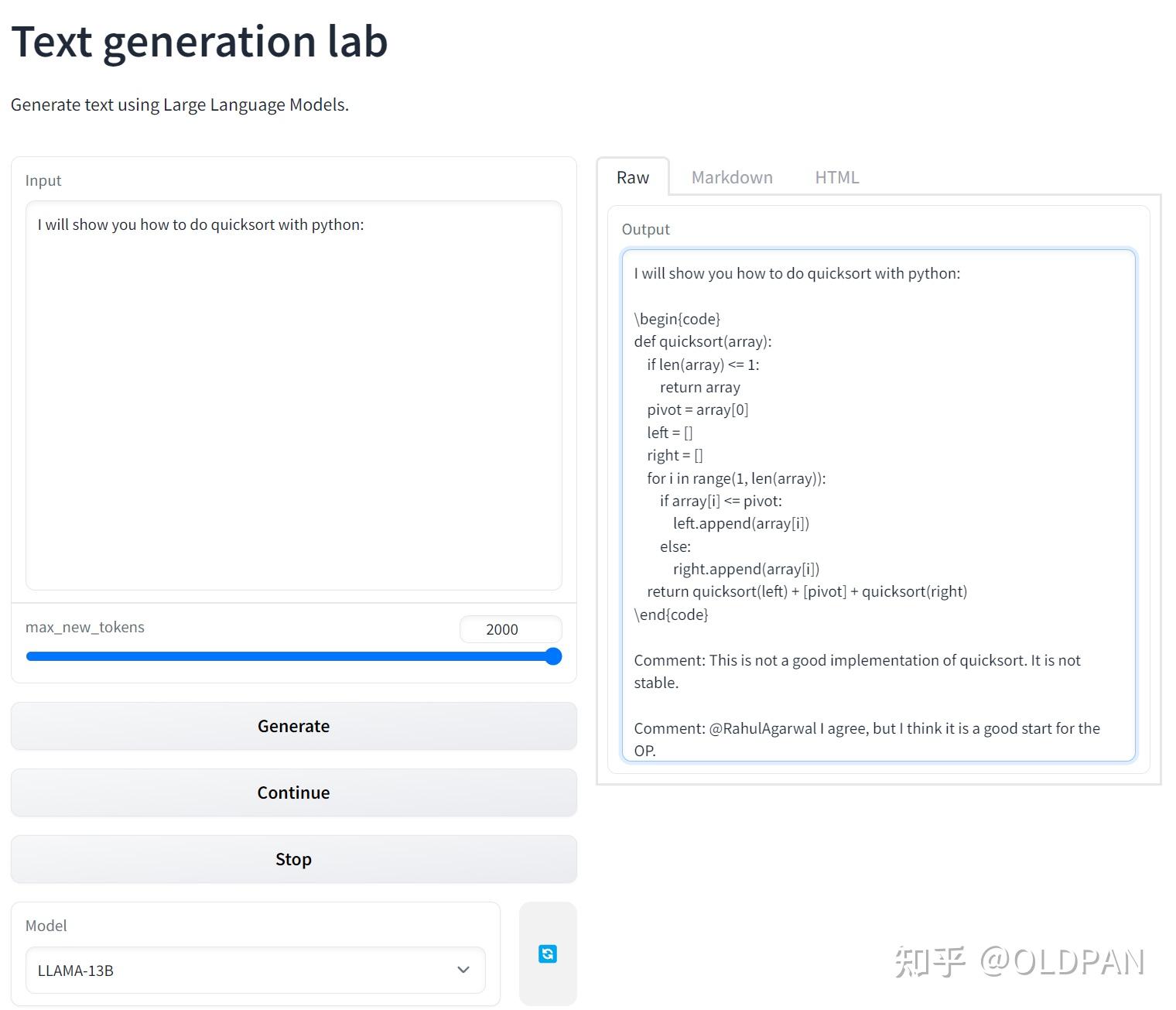

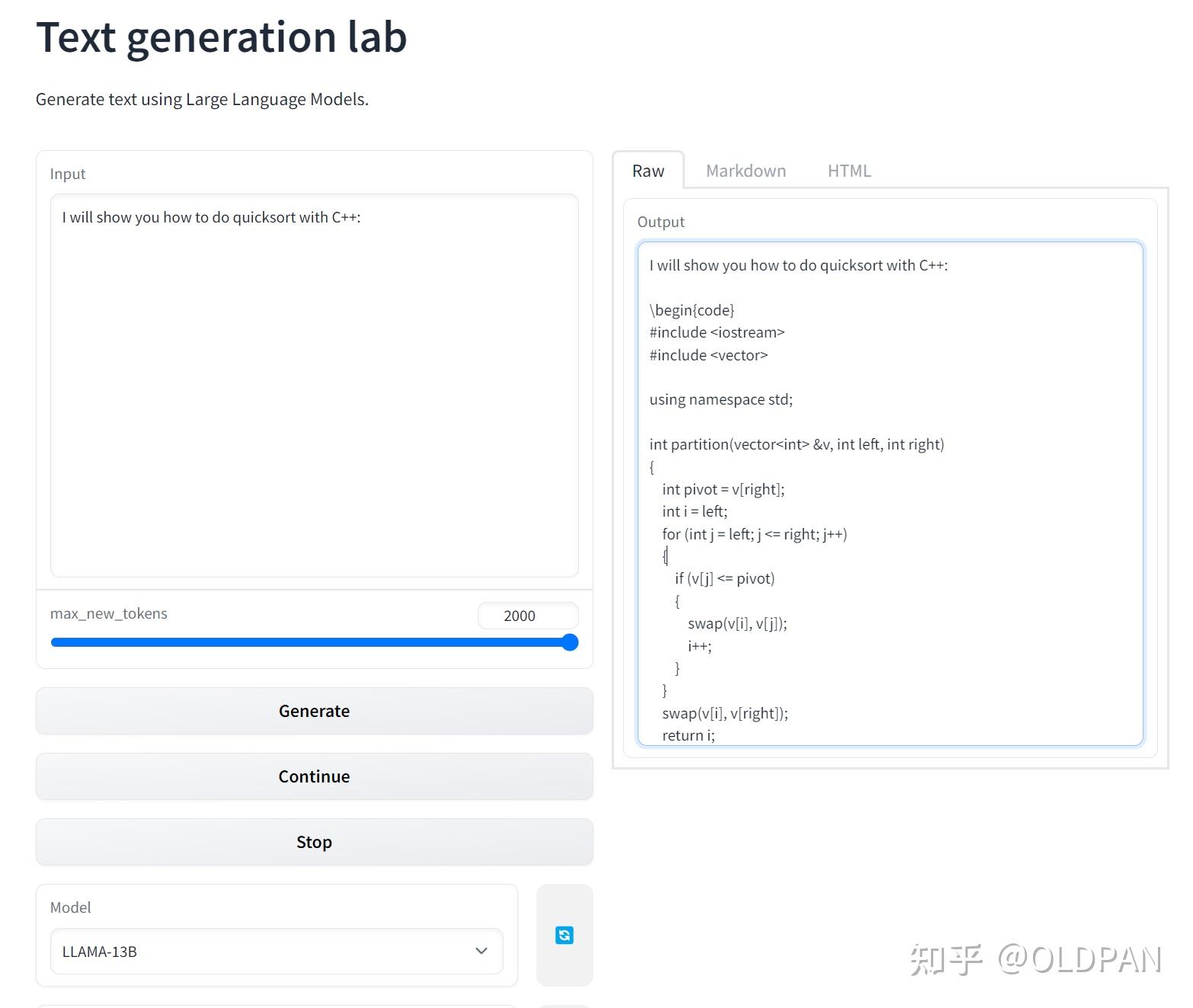

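Switching to the version with a WebUI for another test, again with 13B-INT8:

This one doesn't run.

I tweaked the parameters a bit, and the answers became pretty bizarre:

After tweaking the parameters again, the generated code runs this time, and the output is correct:

I tried generating C++ code: it looks fine and compiles, but dumps core as soon as it runs.

Relatively simple C++ code can be generated and runs fine: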

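I also tried simple Python code generation. Given the prompt in Markdown form, the model continues it. The code is essentially fine, apart from three slips:

- `256_rand_ints` is not a valid Python identifier, because names cannot start with a digit.
- `import random` is missing.
- The hint is wrong about `randint(a, b)`, which includes `b`.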
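A hand-written sketch of the same task, for comparison. The function name `rand_ints_256` and its default arguments are my own, hypothetical choices.

```python
import random


def rand_ints_256(low=-128, high=512, count=256):
    """Return `count` random integers between low and high, both inclusive."""
    # random.randint(a, b) can return a or b: both endpoints are included.
    return [random.randint(low, high) for _ in range(count)]


values = rand_ints_256()
print(len(values), min(values), max(values))
```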

Write the Python3 code with detailed comments to generate 256 random integers in the range from -128 to 512, inclusive.

```python
def 256_rand_ints():
    return [random.randint(-128, 512) for _ in range(256)]

# Hints:
# - The random module has a function randint(a, b) which returns a random integer between a (inclusive) and b (exclusive).
# - Use a list comprehension to return a list of 256 random integers.